Originally published by Javier Rando: The Worst (But Only) Claude 3 Tokenizer

Anthropic recently released Claude 3, a new family of large language models. However, they have not publicly released their tokenizer (yet?). But no worries! You can reverse-engineer the tokenizer by analyzing how the generation is streamed. Let me walk you through the process.

💡 The idea is simple. Ask Claude to repeat some text and observe how the generation is streamed through the network. It turns out that Anthropic serves one token at a time!

The reverse-engineering process

1. Naive idea: inspect the network traffic

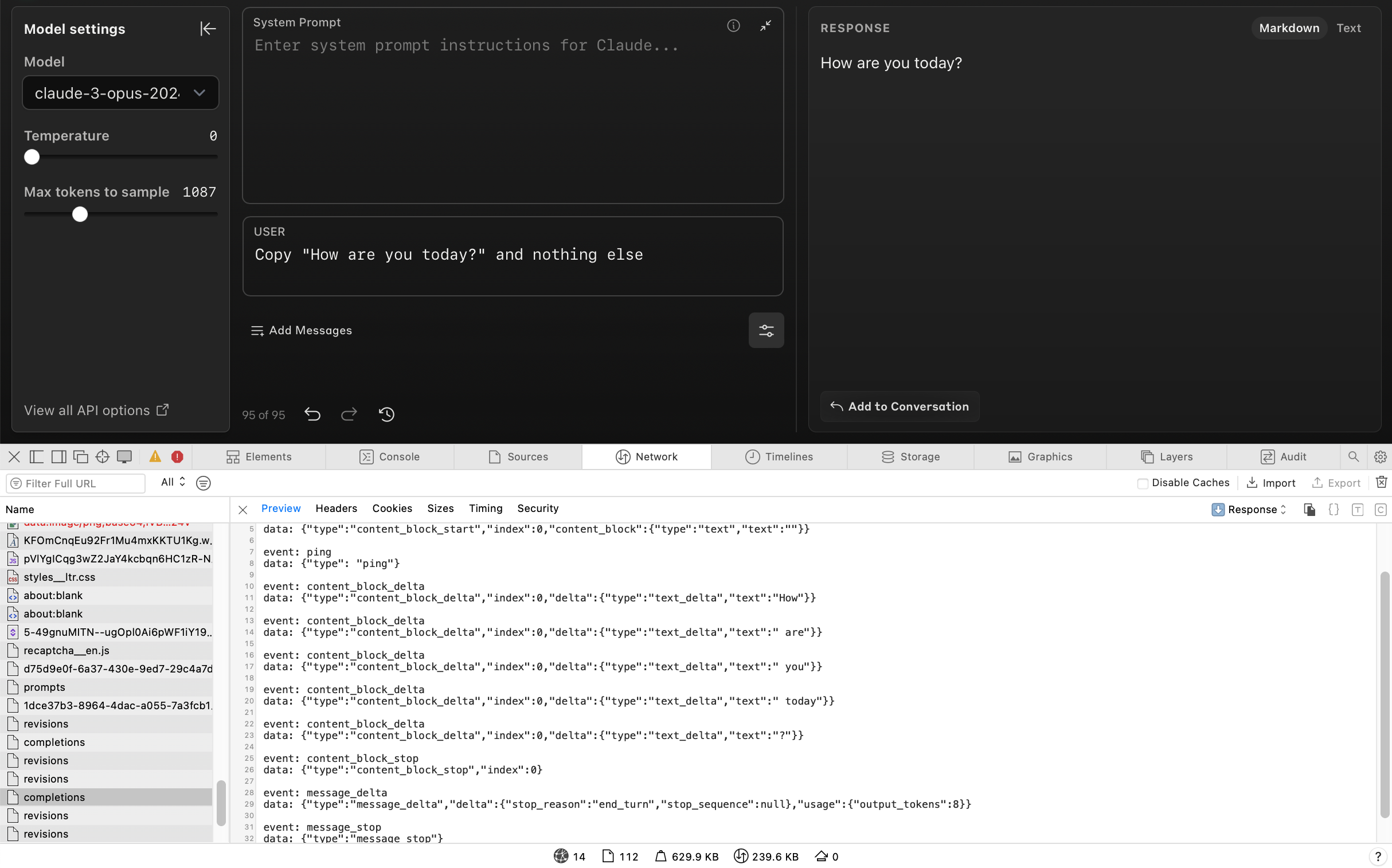

Can we figure out how specific strings are tokenized? As a first step, we inspected the network traffic after making a request to Claude through the Anthropic Workbench. The traffic contains the detailed generation stream, including some promising text deltas. However, these deltas could simply be space-separated words, which do not necessarily match the underlying tokenization.

Network traffic inspection after a request through the Claude Workbench.
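
To reproduce step 1 outside the browser, the sketch below pulls the text deltas out of a captured event stream. It assumes the Workbench traffic uses the same server-sent-event format as the public Messages streaming API (`content_block_delta` events carrying a `text_delta`); the function name `extract_text_deltas` is our own, not part of any library.

```python
import json


def extract_text_deltas(raw_sse: str) -> list[str]:
    """Return the text of every text_delta event in a captured SSE stream, in order."""
    deltas = []
    for line in raw_sse.splitlines():
        line = line.strip()
        # Only "data:" lines carry JSON; skip "event:" lines and blank separators.
        if not line.startswith("data:"):
            continue
        try:
            event = json.loads(line[len("data:"):])
        except json.JSONDecodeError:
            continue  # not JSON (e.g. a keep-alive), ignore it
        if not isinstance(event, dict) or event.get("type") != "content_block_delta":
            continue
        delta = event.get("delta") or {}
        # Each text_delta is one chunk of generated text.
        if delta.get("type") == "text_delta":
            deltas.append(delta.get("text", ""))
    return deltas
```

Pasting the response body copied from the browser's network tab into `extract_text_deltas` yields the deltas in the order they were streamed.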

2. Verify that the stream chunks are not simply words

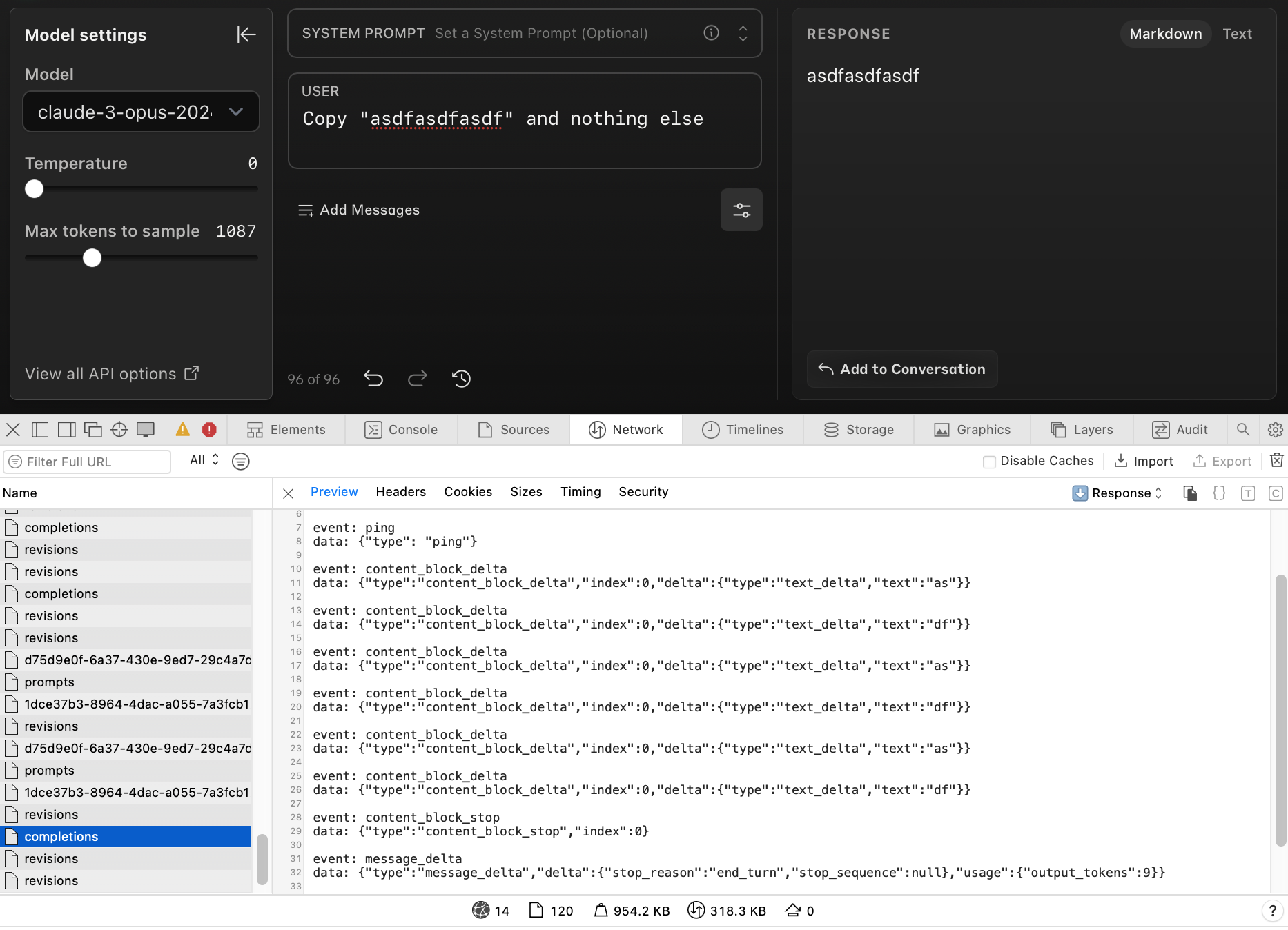

We want to inspect the traffic while Claude generates a string that we are fairly confident spans more than one token. How can we do this? We simply ask Claude to repeat a string. We chose “asdfasdfasdf” and checked that the OpenAI tokenizer splits it into more than one token.

We ask Claude to repeat this string and inspect the network traffic as before. The text deltas turn out to be small pieces of the string, so they are not space-separated words. But are they tokens?

Network traffic inspection after asking Claude to repeat the string “asdfasdfasdf”.
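
The check in step 2 can be made mechanical: the deltas should concatenate back to the repeated string, and at least one boundary between consecutive deltas should fall between two non-space characters, which a purely space-separated stream would not produce. This is a hypothetical helper, and the four-chunk split used in the example below is made up for illustration.

```python
def deltas_are_subwords(text: str, deltas: list[str]) -> bool:
    """True if `deltas` rebuild `text` and at least one chunk boundary splits a word.

    A stream of space-separated words would only break next to whitespace,
    so a boundary between two non-space characters rules that out.
    """
    if "".join(deltas) != text:
        return False  # the chunks do not reproduce the string at all
    boundaries = []
    offset = 0
    for delta in deltas[:-1]:
        offset += len(delta)
        boundaries.append(offset)  # index where the next chunk starts
    return any(
        0 < b < len(text) and not text[b - 1].isspace() and not text[b].isspace()
        for b in boundaries
    )
```

For example, `deltas_are_subwords("asdfasdfasdf", ["as", "df", "asdf", "asdf"])` returns `True`, while `deltas_are_subwords("hello world", ["hello", " world"])` returns `False`.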

3. Check if the stream chunks are actual tokens

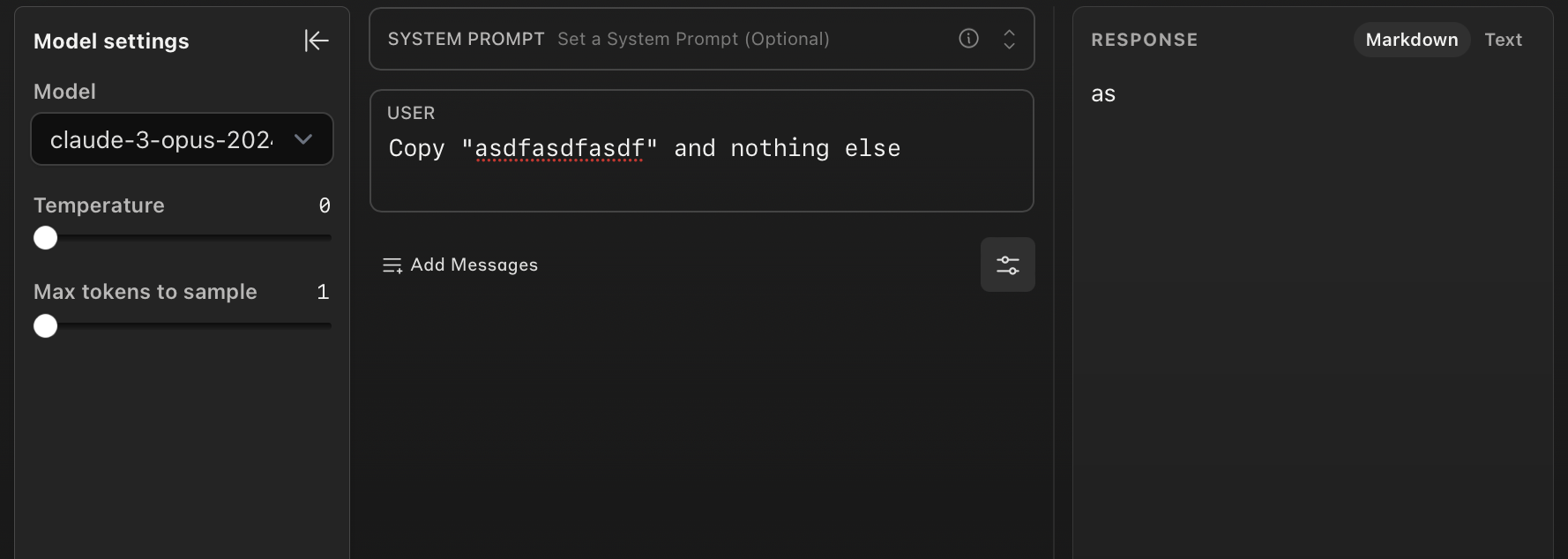

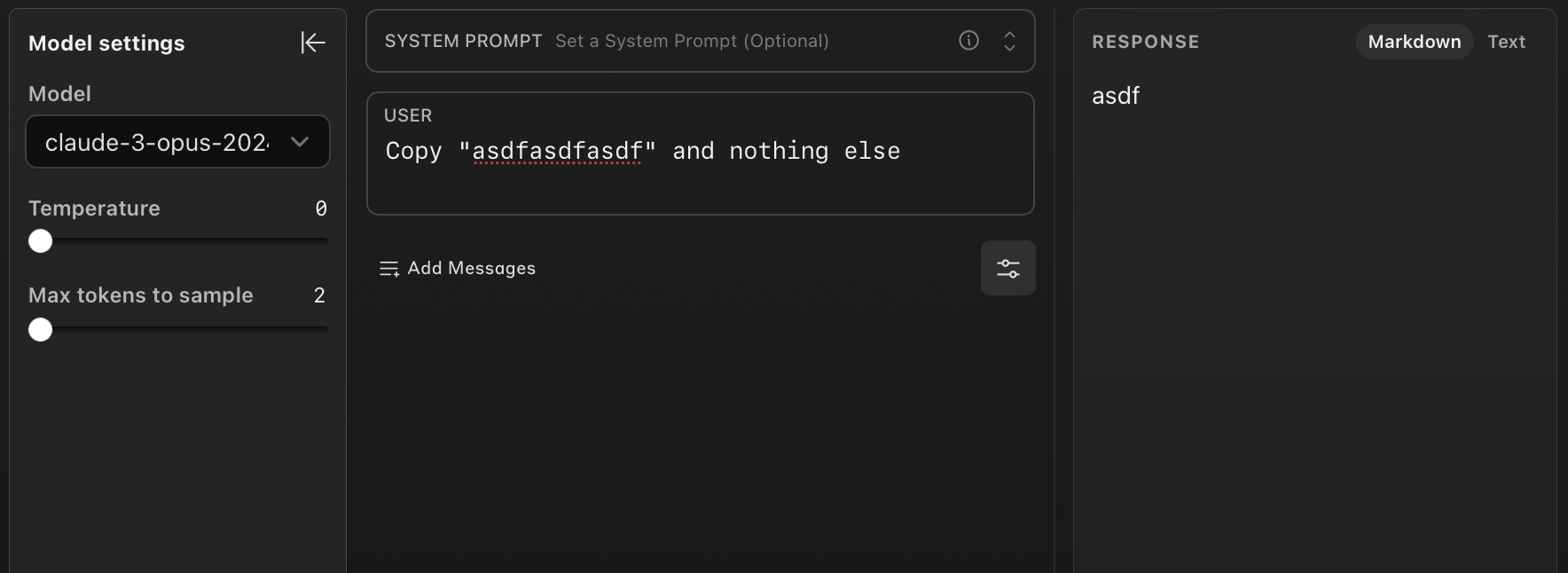

Claude Workbench allows you to limit the maximum number of tokens to generate. We repeat the previous experiment and limit the maximum number of output tokens to 1 and 2, respectively. The generations are “as” (1 token) and “asdf” (2 tokens). This is a strong indication that the text deltas are actual tokens, and the same held across several other experiments.
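
The max-tokens experiment generalizes into a consistency check: if the streamed chunks are tokens, the text returned with `max_tokens=k` should equal the first k chunks joined together. The sketch below assumes the official `anthropic` Python SDK (`client.messages.create`); the helper names are hypothetical.

```python
def truncated_output(client, prompt: str, k: int, model: str) -> str:
    """Text Claude returns when generation is capped at k tokens.

    `client` is assumed to be an anthropic.Anthropic() instance.
    """
    response = client.messages.create(
        model=model,
        max_tokens=k,
        messages=[{"role": "user", "content": prompt}],
    )
    return "".join(block.text for block in response.content if block.type == "text")


def matches_truncations(chunks: list[str], truncated: dict[int, str]) -> bool:
    """True if, for every k, the k-token output equals the first k streamed chunks."""
    return all("".join(chunks[:k]) == text for k, text in truncated.items())
```

With the outputs reported above, `matches_truncations(chunks, {1: "as", 2: "asdf"})` holds whenever the full stream begins with the chunks `"as"` and `"df"`.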

We also verify that the number of tokens obtained with our method matches the count reported in the API usage. We find that the API always reports the number of text tokens + 3. The extra tokens may include start- and end-of-sequence tokens.

Generations when limiting the maximum number of tokens to 1 and 2.
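
To confirm the "+3" pattern across many strings rather than one, collect (chunks, reported token count) pairs and check that the gap is the same every time. This is a hypothetical helper; pass in whichever usage figure you are comparing against.

```python
from __future__ import annotations


def common_overhead(runs: list[tuple[list[str], int]]) -> int | None:
    """Return the constant gap between reported tokens and streamed chunks, or None.

    Each run is (chunks, reported_tokens). None means the gap varies across runs
    (or there are no runs), i.e. a simple "+N" rule does not hold.
    """
    gaps = {reported - len(chunks) for chunks, reported in runs}
    return gaps.pop() if len(gaps) == 1 else None
```

A result of 3 matches the overhead described above; `None` would signal that the relationship is not a fixed offset.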

4. Write a better prompt and implement it in Python

We look for a generic prompt that reliably instructs the model to copy only the text to be tokenized, and we implement the tokenizer using the streaming functionality of the official Anthropic Python library. You can find the resulting implementation here. We believe this “tokenizer” does not expose any proprietary information. If your use of this tool falls within the scope of Anthropic’s Responsible Disclosure Policy, we encourage you to follow their protocols.
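
A minimal sketch of step 4, assuming the official `anthropic` Python SDK (`client.messages.stream` and its `text_stream` iterator). The system prompt, the `<text>` delimiters, the model id and the function names are our own placeholders, not the ones used by the original tool, and the model id may since have been retired.

```python
# Hypothetical copy instruction; not the prompt used by the original tool.
SYSTEM_PROMPT = (
    "Repeat the text between <text> and </text> exactly as written, "
    "including spaces and line breaks. Output nothing else."
)
MODEL = "claude-3-haiku-20240307"  # assumed model id; any Claude 3 model should do


def build_request(text: str, model: str = MODEL, max_tokens: int = 1024) -> dict:
    """Keyword arguments for client.messages.stream() that ask Claude to copy `text`."""
    return {
        "model": model,
        "max_tokens": max_tokens,
        "system": SYSTEM_PROMPT,
        "messages": [{"role": "user", "content": f"<text>{text}</text>"}],
    }


def tokenize_with_claude(client, text: str, **request_options):
    """Stream Claude's copy of `text` and return (chunks, usage).

    `client` is assumed to be an anthropic.Anthropic() instance. Every streamed
    text chunk is treated as one token, which is the premise of this method.
    """
    with client.messages.stream(**build_request(text, **request_options)) as stream:
        chunks = list(stream.text_stream)  # one entry per text delta
        usage = stream.get_final_message().usage  # token counts reported by the API
    if "".join(chunks) != text:
        # If the model did not copy the text verbatim, the chunks say nothing
        # reliable about how `text` itself is tokenized.
        raise ValueError("model output does not match the input text")
    return chunks, usage
```

Usage: create `client = anthropic.Anthropic()` (it reads `ANTHROPIC_API_KEY` from the environment), then call `tokenize_with_claude(client, "asdfasdfasdf")`. Raising on a mismatch is a deliberate choice: if the model paraphrases or drops characters, the chunks no longer describe the input's tokenization, so failing is safer than recording them.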

Store and share your reverse-engineered vocabulary: the tokenizer saves every token you extract from your text locally. You can share these with us, and we will maintain a joint vocabulary by merging everyone’s contributions.
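
The local store and the joint vocabulary can be sketched as follows, assuming each vocabulary is a JSON list of token strings. The file format and the names `save_tokens` and `merge_vocabularies` are our own assumptions, not the tool's actual interface.

```python
from __future__ import annotations

import json
from pathlib import Path


def save_tokens(path: str | Path, tokens: list[str]) -> set[str]:
    """Add `tokens` to the JSON vocabulary at `path` (created if missing); return it."""
    path = Path(path)
    vocab = set(json.loads(path.read_text(encoding="utf-8"))) if path.exists() else set()
    vocab.update(tokens)
    # A sorted list keeps the file stable and easy to diff when shared.
    path.write_text(json.dumps(sorted(vocab), ensure_ascii=False, indent=1), encoding="utf-8")
    return vocab


def merge_vocabularies(*paths: str | Path) -> set[str]:
    """Union of several shared vocabulary files, i.e. a joint vocabulary."""
    merged: set[str] = set()
    for p in paths:
        merged.update(json.loads(Path(p).read_text(encoding="utf-8")))
    return merged
```

Storing tokens as JSON strings preserves leading spaces and non-ASCII characters exactly, and using a set means duplicate tokens from repeated runs collapse automatically.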

The result: the worst (but only) Claude 3 tokenizer

This is probably the least efficient implementation of a tokenizer (but it is also the only publicly available one that we know of!). This may be useful for experiments where tokenization plays an important role and spending some tokens is not a problem. It is unclear how faithful this tokenization will be, but our experiments suggest this is very likely a close approximation.

Hope you find these experiments insightful!
